Install the dependencies to the system.

This allows for the packages to be found for docker, nix, and vcpkg.

Details

Diff Detail

- Repository

- rCOMM Comm

- Lint

Lint Not Applicable - Unit

Tests Not Applicable

Event Timeline

@atul do you know of a good way to make changes to the base-image and have it reflected in the CI?

Currently the build is failing because all of the services are still checking out commapp/services-base:1.2 https://buildkite.com/comm/backup-build-docker/builds/990#018238c8-a54c-4769-a73b-494e24e1fcb2

These should be (latest was locally built):

commapp/services-base latest ae613c79979f 3 days ago 1.64GB commapp/services-base 1.2 ae1db14d0f91 2 weeks ago 1.37GB

Think I responded (to what I thought) was equivalent question on Comm.

Let me know if there's something I'm missing/anything else I need to address

| services/base-image/docker/install_glog.sh | ||

|---|---|---|

| 10 ↗ | (On Diff #15079) | Hm, the choice of 4 seems kind of arbitrary here? @karol did the following in services/lib/docker/build_sources.sh: NPROC=0 NPROC=$(nproc 2> /dev/null || echo 1) if [[ $NPROC -eq 1 ]]; then NPROC=$(sysctl -n hw.physicalcpu 2> /dev/null || echo 1) fi echo "building the server (nproc=$NPROC)..." pushd cmake/build cmake ../.. make -j $NPROC to conditionally set -j based on the number of CPUs. Though maybe there's some nix determinism sort of reason to make sure it's always the same on every machine? If so I guess 4 is a reasonable choice for lowest common denominator number of cores for a physical machine... but should we maybe consider more resource constrained virtual environments (eg someone only gives docker vm 1 core)? |

| services/base-image/docker/install_grpc.sh | ||

|---|---|---|

| 9 ↗ | (On Diff #15079) | Should we add --single-branch flag to this git clone command like we're doing for the other dependencies? Realize this might be out of scope for this diff... but we can probably just sneak it in? |

At a high level this looks good.

However, I'm pretty concerned about the base-image in our repo and the base-image on DockerHub being out of sync.

Someone looking at the repo won't have an accurate picture of what base-image actually is which would make debugging/troubleshooting/etc pretty painful.

Can we point the FROM of the child Dockerfiles to the base-image in the repo? I think it should be handled in this diff so we can ensure that all the docker images in the repo continue working correctly and not out of coincidence based on whatever version of base-image is on DockerHub.

| services/base-image/docker/install_double.sh | ||

|---|---|---|

| 3 ↗ | (On Diff #15079) | If we're doing this instead of set -e we should change all the other installation scripts to be consistent (can be handled in a different diff). |

| services/base-image/docker/install_grpc.sh | ||

| 43–46 ↗ | (On Diff #15079) | I think this will be good with another proofreading pass. We should be consistent with capitalization (eg Ubuntu?), wrapping things with backticks, and punctuation (eg ending last sentence with period) |

Patched this diff and ran shellcheck locally and found no issues with shell scripts within services/base-image/docker

Though maybe there's some nix determinism sort of reason to make sure it's always the same on every machine? If so I guess 4 is a reasonable choice for lowest common denominator number of cores for a physical machine...

I could also just do make -j, which is almost the same as a make -j $(nproc)

| services/base-image/docker/install_double.sh | ||

|---|---|---|

| 3 ↗ | (On Diff #15079) | fair, these are usually the "minimal hardening" done for bash scripts in nix. |

| services/base-image/docker/install_glog.sh | ||

| 10 ↗ | (On Diff #15079) | 4 is arbitrary, I just used it because we were just invoking make which defaults to 1. And I got tired of the long build times |

Yeah if there are no issues with that seems like the way to go?

| services/base-image/docker/install_glog.sh | ||

|---|---|---|

| 10 ↗ | (On Diff #15079) |

Totally off topic but wonder if using CMake to generate ninja instead of Unix Makefile would provide any speedups. I think ninja has more of an impact for incremental builds vs. clean builds but maybe there's a low-effort speedup win there. |

we can, just need to do cmake .. -Gninja but I don't think we'll get too much benefit. If people want to use it for their personal workflow, then they would be able to.

But our c++ code base is relatively "small", we just have 3 services, 3 service utilities libraries, and ~6 projects in native/cpp; each project is usually just a handful of headers and maybe some source files. We essentially have a microservice version of a c++ codebase, that why we have so much integration pain :)

| services/base-image/docker/install_glog.sh | ||

|---|---|---|

| 10 ↗ | (On Diff #15079) | I mean, this is an ubuntu image, which has gnu core utils. We can assume that nproc will be available. |

| 10 ↗ | (On Diff #15079) | usage of nproc || sysctl -n hw.physicalcpu makes sense for a docker + darwin workflow, but these scripts will only be running in a docker flow. Also, once we fully move services to a fully nix workflow, we will never have to build glog for dev environment |

| services/base-image/docker/install_grpc.sh | ||

| 9 ↗ | (On Diff #15079) | probably... I'll just add it as a separate diff |

| 9 ↗ | (On Diff #15079) | |

| services/base-image/docker/install_double.sh | ||

|---|---|---|

| 3 ↗ | (On Diff #15079) | actually, to make this consistent is a little more tricky as some scripts make use of pipefail (e.g. nproc || echo 1), which will hard crash with set -o pipefail |

@atul, I decided to go with make -l$(nproc) -j as -j without any arguments is "create unlimited jobs", and -l$(nproc) is "don't create new jobs unless load average is below N".

This is the desired behavior because it will take in the system's current cpu capacity for job scheduling. When make launches a job it won't know how many cores it will use. Nix has a similar jobs vs cores separation.

Since all of these scripts are meant to be used with docker, and we are using linux images with GNU coreutils on them, we have strong guarantees that nproc will exist.

| services/base-image/docker/install_glog.sh | ||

|---|---|---|

| 10 ↗ | (On Diff #15079) | Ah ok, I wonder why we're determining NPROC manually in build_sources.sh then? Guessing it's probably because we were previously using Alpine Linux instead of Ubuntu or something (cc @karol) |

| services/base-image/docker/install_grpc.sh | ||

| 9 ↗ | (On Diff #15079) | Thanks |

I might also make it -j$(nproc), seems like the sampling makes it do bursts of creation and waiting

Minor changes (double-quoting).

| services/base-image/docker/install_aws_sdk.sh | ||

|---|---|---|

| 18 ↗ | (On Diff #15124) | |

| services/base-image/docker/install_double.sh | ||

| 11 ↗ | (On Diff #15124) | |

| services/base-image/docker/install_folly.sh | ||

| 13 ↗ | (On Diff #15124) | |

| services/base-image/docker/install_glog.sh | ||

| 10 ↗ | (On Diff #15124) | |

| services/base-image/docker/install_grpc.sh | ||

| 32 ↗ | (On Diff #15124) | |

| 43 ↗ | (On Diff #15124) | |

| 55 ↗ | (On Diff #15124) | |

Looks good after minor language tweaks and addressing @abosh's shellcheck feedback.

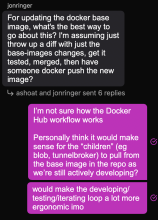

Can we test the "child" images (eg blob, tunnelbroker, backup) against this updated base image before landing? Would feel more comfortable landing this after some sort of confirmation, but understand if that would be prohibitively time consuming or whatever.

| services/base-image/docker/install_grpc.sh | ||

|---|---|---|

| 47–50 ↗ | (On Diff #15124) | |

Yup! Going to get it added to the CI today or tomorrow, so it'll automatically lint things. There's some problematic scripts in the codebase right now that are blocking adding ShellCheck to the full CI build. I'm manually fixing them, so these changes will help resolve some of those errors.

Can we test the "child" images (eg blob, tunnelbroker, backup) against this updated base image before landing? Would feel more comfortable landing this after some sort of confirmation, but understand if that would be prohibitively time consuming or whatever.

@atul yes, if you go to the last two diffs in this stack or the tunnelbroker diff, they make use of this workflow, but don't use docker. If you follow that test plan, you will have tested this